Known Issues

Cannot access your data in the persistent folder

Sometimes you can no longer access the data you put in the persistent folder of your container. This can happen when a node goes down: if the persistent volume your pod is connected to is on that node, the pod can no longer reach it.

You can fix this issue by restarting the pod of your application, which makes it reconnect to resources on nodes that are up.

To restart the pod, go to Topology, click on your application, open the Details tab, decrease the pod count to 0, then put it back up to 1.
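The same restart can also be sketched from the command line with oc scale. This is a minimal sketch, assuming you are already logged in with oc and that your application is a Deployment called my-app (a hypothetical name; pass deploymentconfig as the kind if your application uses a DeploymentConfig). The OC variable is only an illustrative hook of this sketch for swapping the client binary, not an oc feature.

```shell
# Restart an application by scaling it down to 0 pods and back up to 1.
# Usage: restart_app <resource-kind> <name>
# Both arguments are placeholders; the OC variable is an assumption of this sketch.
restart_app() {
  kind="$1"
  name="$2"
  "${OC:-oc}" scale "$kind/$name" --replicas=0 &&
    "${OC:-oc}" scale "$kind/$name" --replicas=1
}

# Example: restart_app deployment my-app
```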

Large volumes

You may see a message similar to this in the Events tab:

Error: kubelet may be retrying requests that are timing out in CRI-O due to system load. Currently at stage container volume configuration: context deadline exceeded: error reserving ctr name

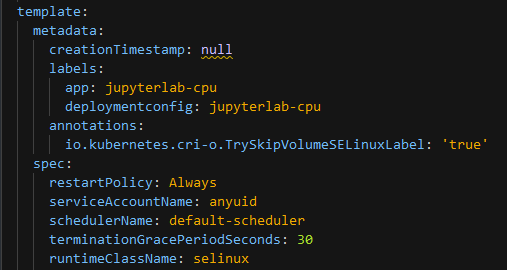

The issue above will occur if you are using a large persistent volume. It can be resolved by adding the following to your Deployment(Config):

spec:
  template:
    metadata:
      annotations:
        io.kubernetes.cri-o.TrySkipVolumeSELinuxLabel: 'true'
    spec:
      runtimeClassName: selinux

Take note of the indentation and the place in the file!
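If you prefer not to edit the YAML by hand, the same two fields could be set with oc patch. This is only a sketch under assumptions: my-app is a hypothetical application name, the resource kind must match your application (deployment or deploymentconfig), and the OC variable is an illustrative hook of this sketch. The JSON below mirrors the YAML fragment above.

```shell
# Print a JSON merge patch that sets the annotation and the runtime class.
selinux_patch_json() {
  cat <<'EOF'
{"spec":{"template":{
  "metadata":{"annotations":{"io.kubernetes.cri-o.TrySkipVolumeSELinuxLabel":"true"}},
  "spec":{"runtimeClassName":"selinux"}}}}
EOF
}

# Apply the patch to an application.
# Usage: apply_selinux_patch <resource-kind> <name>   (both are placeholders)
apply_selinux_patch() {
  "${OC:-oc}" patch "$1/$2" --type=merge -p "$(selinux_patch_json)"
}

# Example: apply_selinux_patch deployment my-app
```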

An example of this can be found here:

DockerHub pull limitations

If the Events tab shows this error:

--> Scaling filebrowser-case-1 to 1

error: update acceptor rejected my-app-1: pods for rc 'my-project/my-app-1' took longer than 600 seconds to become available

Then check for the application ImageStream in Build > Images, and you might see this for your application image:

Internal error occurred: toomanyrequests: You have reached your pull rate limit.

You may increase the limit by authenticating and upgrading: https://www.docker.com/increase-rate-limit.

You can solve this by creating a secret to log in to DockerHub in your project:

oc create secret docker-registry dockerhub-login --docker-server=docker.io --docker-username=dockerhub_username --docker-password=dockerhub_password --docker-email=example@mail.com

Then link the login secret to the default service account:

oc secrets link default dockerhub-login --for=pull

Logging in to DockerHub should raise the pull rate limit.
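To check that the secret is really attached to the service account, something like the following could be used. It is a minimal sketch: it assumes the secret and service account names used above (dockerhub-login and default), and the OC variable is an illustrative hook of this sketch, not part of oc.

```shell
# Succeeds if the secret is listed among the service account's imagePullSecrets.
# Usage: pull_secret_linked <secret-name> <service-account>
pull_secret_linked() {
  "${OC:-oc}" get serviceaccount "$2" -o jsonpath='{.imagePullSecrets[*].name}' |
    tr ' ' '\n' | grep -qx -- "$1"
}

# Example:
# pull_secret_linked dockerhub-login default && echo "secret is linked"
```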

To solve this issue permanently, you can publish the DockerHub image to the GitHub Container Registry.

Follow these instructions on your laptop:

Log in to the GitHub Container Registry with:

docker login ghcr.io

Pull the Docker image from DockerHub:

docker pull myorg/myimage:latest

Change its tag:

docker tag myorg/myimage:latest ghcr.io/maastrichtu-ids/myimage:latest

Push it back to the GitHub Container Registry:

docker push ghcr.io/maastrichtu-ids/myimage:latest
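The pull, tag and push steps above can be wrapped in one small function if you need to mirror several images. This is a sketch, not an official script: the image names are the example names used above, and the DOCKER variable is an illustrative hook of this sketch. It assumes you have already logged in to the GitHub Container Registry.

```shell
# Copy an image from one registry to another: pull, retag, push.
# Usage: mirror_image <source-image> <target-image>
mirror_image() {
  src="$1"
  dst="$2"
  "${DOCKER:-docker}" pull "$src" &&
    "${DOCKER:-docker}" tag "$src" "$dst" &&
    "${DOCKER:-docker}" push "$dst"
}

# Example:
# mirror_image myorg/myimage:latest ghcr.io/maastrichtu-ids/myimage:latest
```

Each step only runs if the previous one succeeded, so a failed pull never results in a push.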

If the image does not exist, GitHub will create it automatically when you push it for the first time! You can then head to your organization's Packages tab to see the package.

By default, new images are set as Private. Go to your Package Settings and click Change Visibility to set it as Public; this avoids the need to log in to pull the image.

If you want access to the latest version, you can update the image, for example by setting up a GitHub Actions workflow to do so.

Finally, you will need to update your DSRI deployment, or template, to use the newly created image on ghcr.io, and redeploy the application with the new template.

How to run a function within a container "in the background"

If the Events tab shows this error:

--> cd /usr/local/src/work2/aerius-sample-sequencing/CD4K4ANXX

Trinity --seqType fq --max_memory 100G --CPU 64 --samples_file samples.txt --output /usr/local/src/work2/Trinity_output_zip_090221

error: The function starts but at some points just exits without warnings or errors to Windows folder

On the DSRI, the command keeps running fine in the container's terminal but never finishes. At some point a red "disconnected" label appears, the terminal stops, and the analysis never continues.

Both issues occur because the running process is attached to the terminal.

You should be able to run it in the background the "Bash way": add nohup at the beginning of the command and & at the end.

It will run in the background, and all output that would have gone to the terminal will go to a file named nohup.out in the directory where you started the command.

nohup Trinity --seqType fq --max_memory 100G --CPU 64 --samples_file samples.txt --output /usr/local/src/work2/Trinity_output_zip_090221 &

To check if it is still running:

ps aux | grep Trinity
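Note that ps aux | grep Trinity can also list the grep command itself. A slightly more robust variant is to keep the process ID when you start the job and check that ID later. The sketch below shows one way to do it; the function names and the trinity.log file name are hypothetical, and output goes to a log file of your choice instead of nohup.out.

```shell
# Start a command detached from the terminal, sending all output to a log file.
# Prints the PID of the background process.
# Usage: run_in_background <log-file> <command> [args...]
run_in_background() {
  log_file="$1"
  shift
  nohup "$@" > "$log_file" 2>&1 &
  echo "$!"
}

# Succeeds while a process with the given PID still exists.
is_running() {
  kill -0 "$1" 2>/dev/null
}

# Example (trinity.log is a hypothetical file name):
# pid=$(run_in_background trinity.log Trinity --seqType fq --samples_file samples.txt)
# is_running "$pid" && echo "still running"
```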

Be careful: make sure the terminal uses bash and not the plain shell ("sh").

To use bash, just type bash in the terminal:

bash

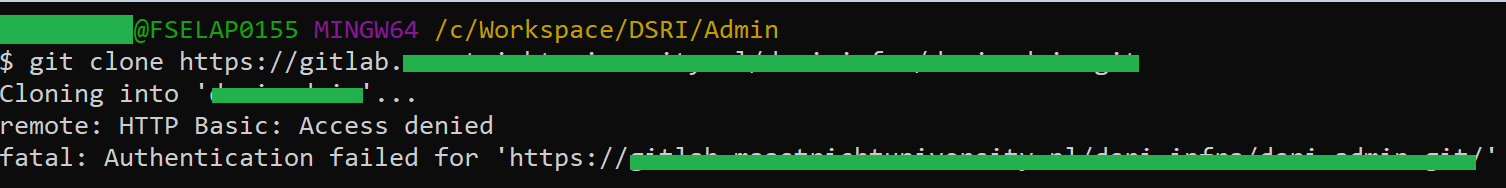

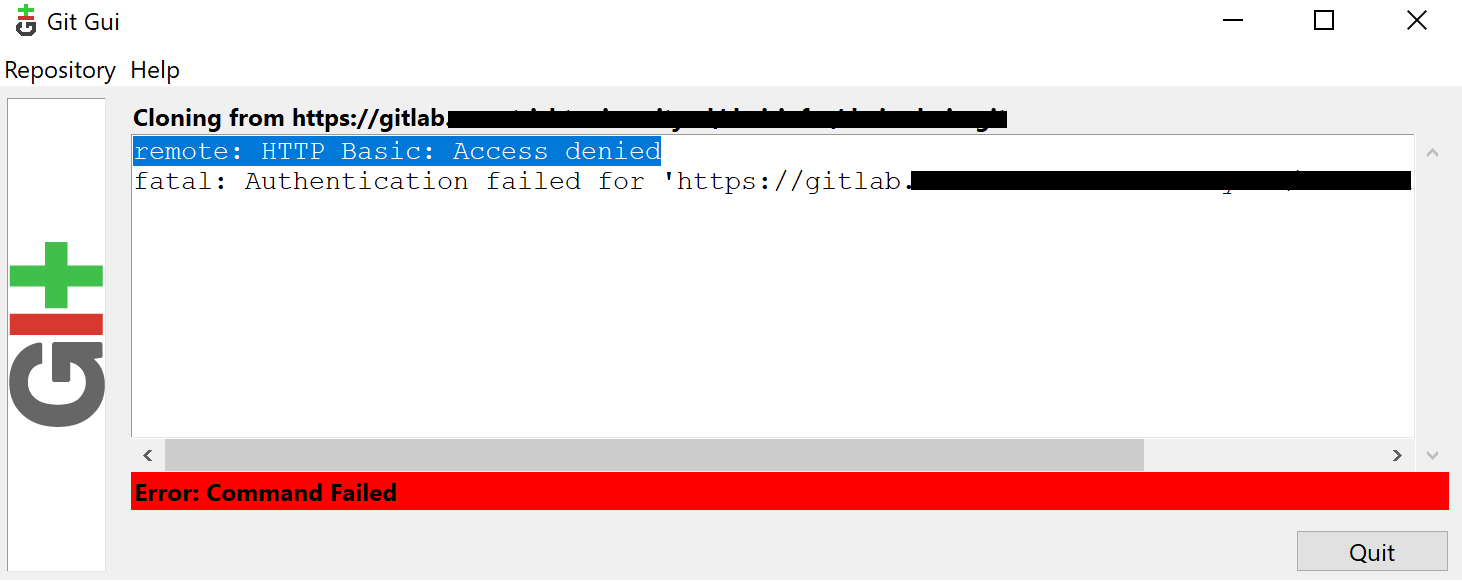

Git authentication issue

⚠️ remote: HTTP Basic: Access denied fatal: Authentication failed for

This happens every time we are forced to change the Windows password.

Run this command from PowerShell (run as administrator):

git config --system --unset credential.helper

Then remove the gitconfig file from the C:\Program Files\Git\mingw64/etc/ location (note: this path will be different on a Mac, for example "/Users/username").

After that, run a git command such as git pull or git push. Git will ask for your username and password; once you enter valid credentials, the command works.
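If Git still does not ask for your credentials, a credential helper may be configured in another config file. The following sketch lists every credential.helper setting together with the file that defines it, so you can see which one to unset. The function name and the GIT variable are illustrative assumptions of this sketch.

```shell
# List every credential.helper setting along with the config file it comes from.
# git exits with a non-zero status if no helper is configured anywhere.
show_credential_helpers() {
  "${GIT:-git}" config --show-origin --get-all credential.helper
}

# Example: show_credential_helpers
```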

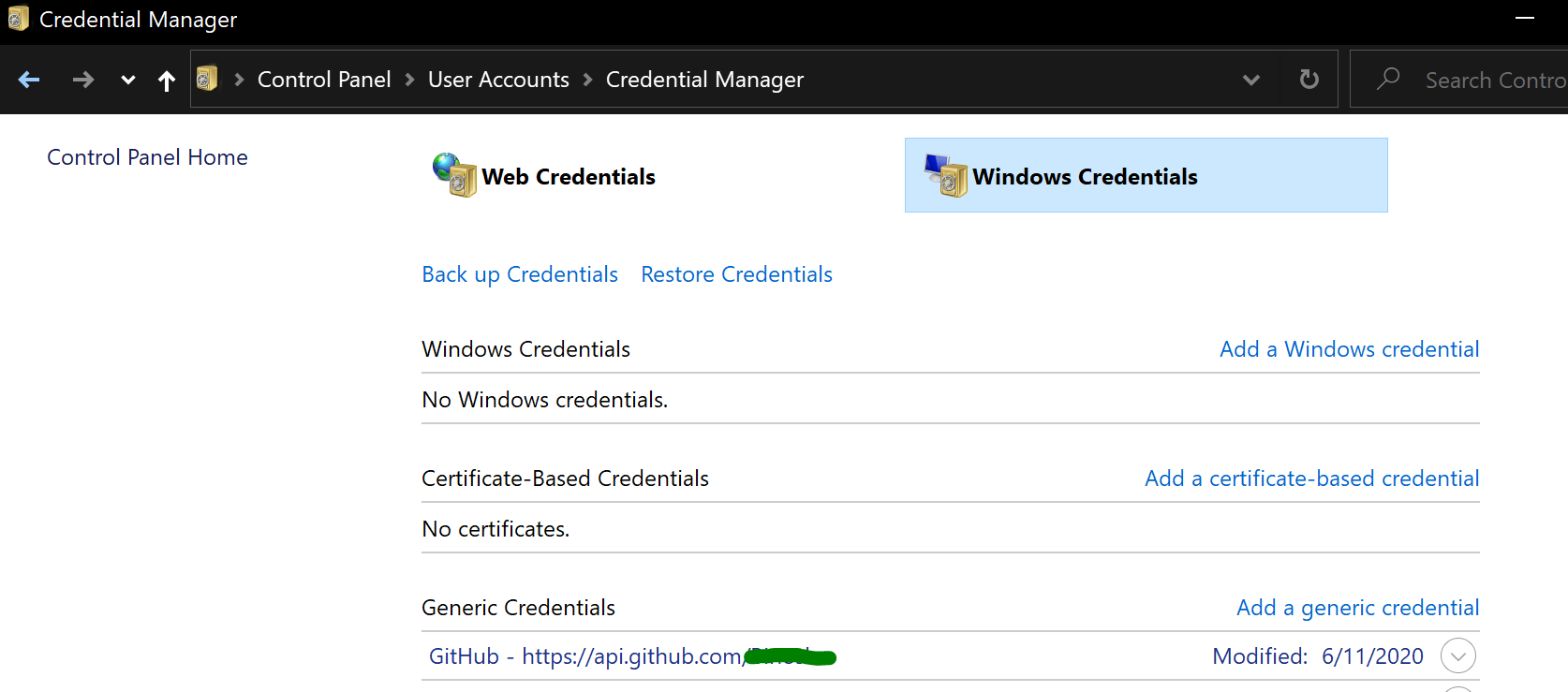

Windows:

- Go to Windows Credential Manager. On an EN-US Windows, press the Windows key and type 'credential'. On other localized Windows variants, use the localized term.

Alternatively, you can run control /name Microsoft.CredentialManager from the Run dialog (WIN+R).

- Edit the git entry under Windows Credentials, replacing the old password with the new one.

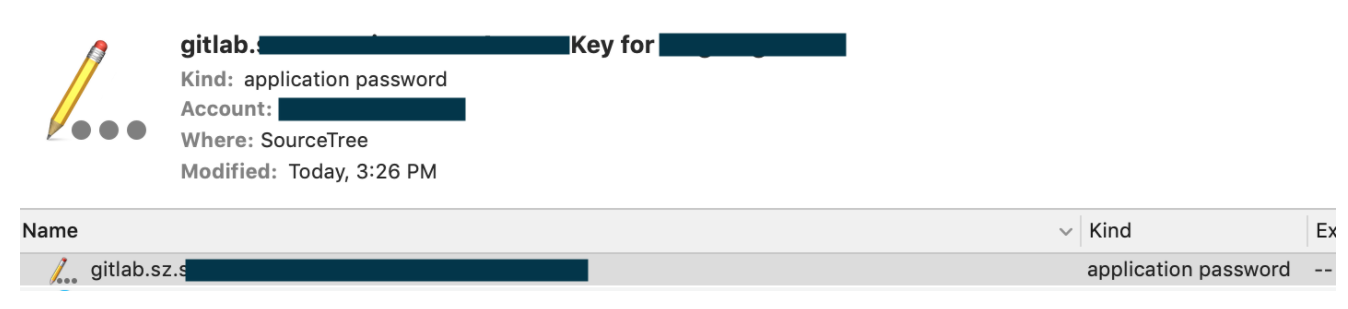

Mac:

Press cmd+space and type "Keychain Access".

You should find a key with a name like "gitlab.*.com Access Key for user". You can order by date modified to find it more easily.

- Right click and delete.

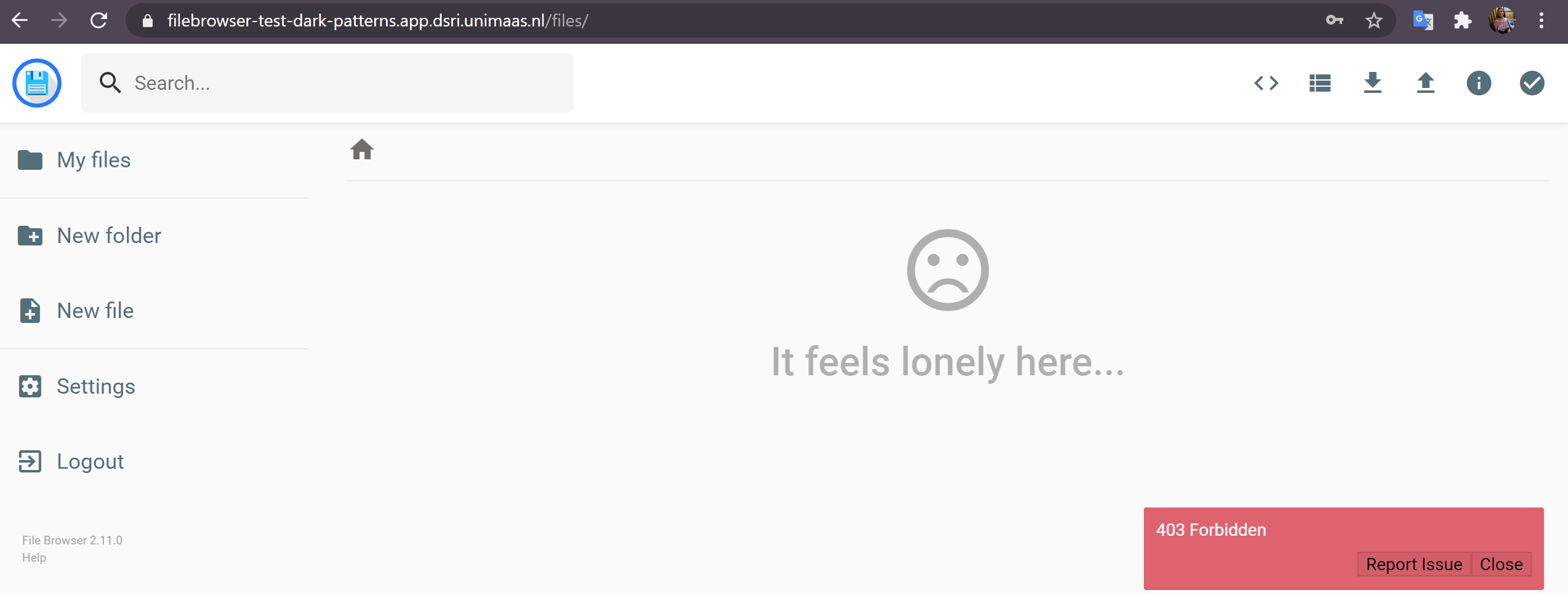

Filebrowser 403 forbidden

If you get a 403 forbidden error while trying to upload folders or files, or while creating a new folder or file:

403 forbidden

This issue occurs if you are not using persistent storage.

Persistent storage for your data can be created by the RCS team. Contact the RCS team to request it.

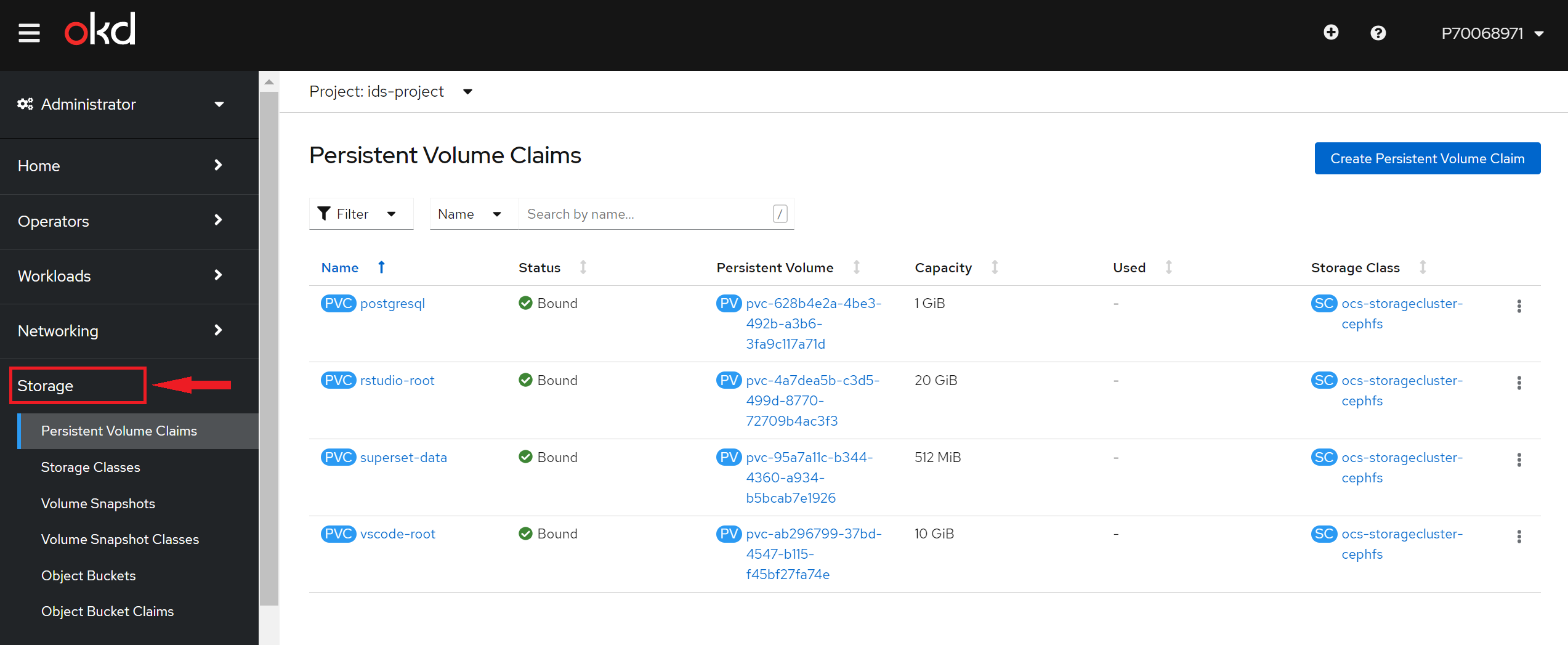

You can find the persistent storage name as below